Background

I’ve spent the last few months excitedly building products. Recent advances in deep learning, and Gen-AI in particular, have given me a new optimism about what’s possible and how quickly and effectively it can be built. It’s been exhilarating to build quick prototypes on top of foundation models and get magical results. However, it’s surprisingly difficult to get from an interesting prototype to a genuinely delightful product. The rigour traditionally associated with applying a deep learning model still seems nascent when building products with Gen-AI. So I was excited to come across DSPy, its “programming over prompting” approach, and its potential to deliver a more robust product experience on top of Gen-AI. In this post I’ll walk through how I used DSPy to rebuild the event ingestion part of an old project.

Many years ago, before deep learning became the state of the art for natural language processing, I built an event listing product: a comprehensive listing of music events in Dublin and other cities. At the time there was already plenty of information online about music events in Dublin, but it was spread across a variety of silos, from venue and promoter listings to news sites and blogs. The product worked by ingesting events from many of these unstructured sources, extracting the relevant information, reconciling duplicates and ambiguities, and storing the structured result in a database. I used named entity recognition (NER) with NLTK to extract the artists, venues, genres, dates, etc. It worked well, but it was essentially a big bunch of rules that were disappointingly specific to particular event sources, and consequently brittle to maintain and extend. Disappointing in the sense that coding the rules took so much longer than it would take a person to read and make sense of the same information.
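The original rules are long gone, but the “reconciling duplicates” step is easy to illustrate. Below is a minimal Python sketch, not the original code: it assumes events have already been extracted into a hypothetical `Event` record and groups listings whose normalised artist, venue and date match. The hand-written normalisation (dropping punctuation and a leading “the”) is exactly the kind of source-specific rule that accumulates and becomes brittle.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    # Hypothetical record for an already-extracted listing.
    artist: str
    venue: str
    day: date
    source: str


def _key_part(text: str) -> str:
    # Lower-case, strip punctuation and a leading "the" so "The X" and "x!" collide.
    text = re.sub(r"[^\w\s]", "", text.lower()).strip()
    text = re.sub(r"^the\s+", "", text)
    return " ".join(text.split())


def reconcile(events: list[Event]) -> dict[tuple[str, str, date], list[Event]]:
    """Group listings that describe the same gig, keyed on normalised artist, venue and date."""
    groups: dict[tuple[str, str, date], list[Event]] = {}
    for event in events:
        key = (_key_part(event.artist), _key_part(event.venue), event.day)
        groups.setdefault(key, []).append(event)
    return groups
```

Each group can then be merged into a single database row; anything the normalisation misses, such as a misspelt artist name, slips through as a separate event.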

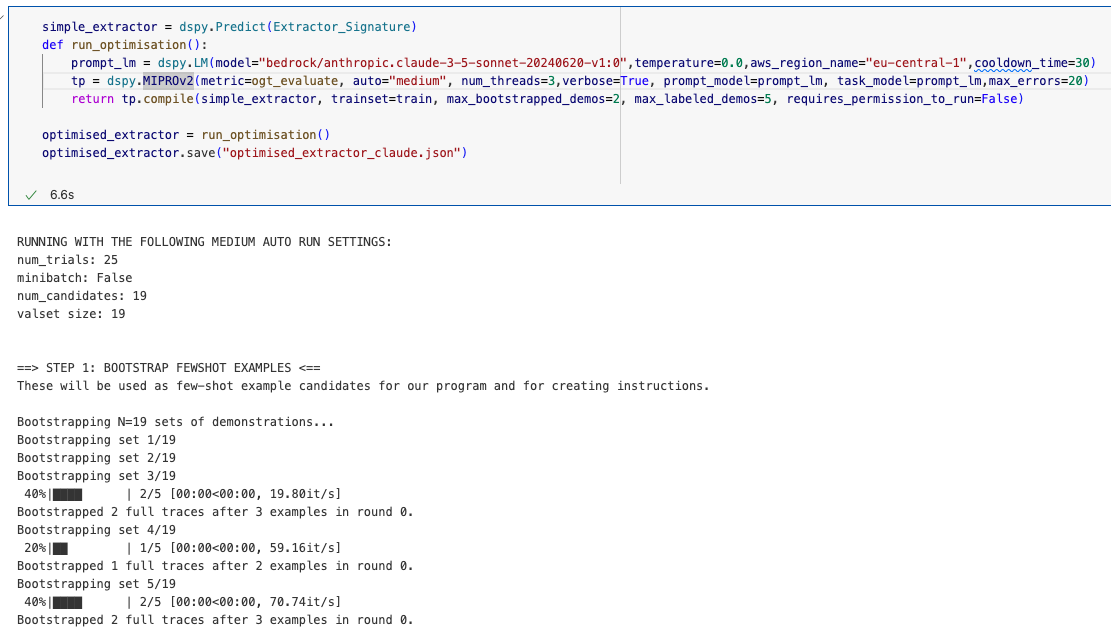

When I read a bit more about DSPy, I was reminded of the excellent Deep Learning for Coders course from fast.ai and its approach to deep learning, in particular lesson one’s approach of bootstrapping a dataset from the internet in order to fine-tune a model to answer a particular question. So I thought I’d try the same pragmatic approach with DSPy. In the linked notebook I attempt to use DSPy to ingest music events from a variety of sources and extract structured information.

Prerequisites:

- OpenAI API key; it’s quick to get up and running

- Langfuse API keys; DSPy can be hard to debug so Langfuse is useful for tracing and logging

- Claude on Bedrock; just to compare a different LLM, but any LiteLLM-supported model should be simple to swap in

Let’s jump into the notebook

Continue from the events notebook in this repo.

Conclusion

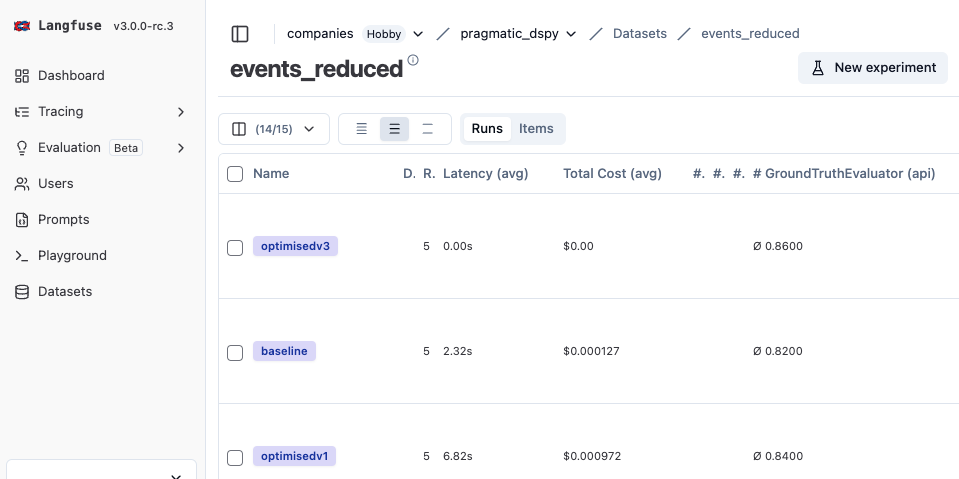

There is something very familiar and reassuring about DSPy. I guess it’s down to DSPy taking some design cues from PyTorch. It does feel a lot more like programming than tweaking prompts by hand. DSPy also seems to push you down the path of building and curating a dataset like a machine learning engineer. On the negative side, it abstracts away the interactions with the LLM, which makes it very difficult to debug when something goes wrong. However, that issue is mostly mitigated by LLM observability tools like Langfuse.

Although the original product was mothballed many years ago, it’s pretty obvious that the latest LLMs easily outperform its rules-based approach. With DSPy it’s easy to see how to bootstrap an even better-performing and more maintainable alternative.

Overall, I’m excited to build products with DSPy, but I’ll be trying to automate the management of datasets and the tuning of prompts as much as possible.

Note: Be careful when scraping websites; check each website’s T&Cs and robots.txt beforehand.
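The robots.txt half of that check can be automated with Python’s standard library. A minimal sketch: `allowed_to_fetch` and the `event-ingest-bot` user agent are hypothetical names, and a real crawler would normally load the live file with `RobotFileParser.set_url(...)` and `read()` instead of passing the text in. It covers robots.txt only, not the T&Cs.

```python
from urllib.robotparser import RobotFileParser


def allowed_to_fetch(robots_txt: str, url: str, user_agent: str = "event-ingest-bot") -> bool:
    """Return True if the given robots.txt content permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file as a sequence of lines.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```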